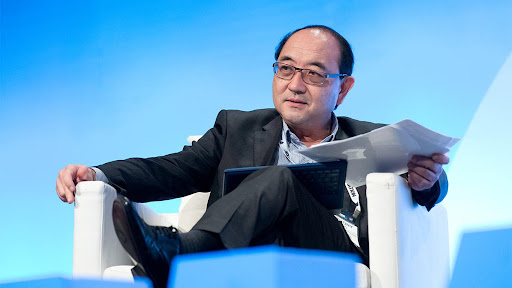

Written by Anthony Wong, President of IFIP, CIO, Lawyer and Futurist

© Homepix Photography | Anthony Wong, President of IFIP, CIO, Lawyer and Futurist

In recent months, we have been awed by the public releases of new generative AI technologies such as ChatGPT, DALL-E and Stable Diffusion. These technologies are rapidly moving AI into the mainstream and taking the interactions between humans and machines to new heights, opening new frontiers for accessing knowledge and for generating works of art, poems, stories, code and more.

The list of generative AI technologies grows longer by the day. It includes the recently launched ChatGPT rival, Bard, whose provision of inaccurate information caused the share price of Google’s parent company, Alphabet, to plummet.

While generative AI has ushered humanity into an era of new creativity and productivity, it has also opened a Pandora’s box of controversies. These include the generation of malicious and fake news stories, the amplification of demographic stereotypes and biases, unfair competition, and the use of copyrighted content to train generative AI models without permission, to name a few.

In this short article, we look at the debate in relation to the use of copyrighted content to train generative AI models.

Many artists have spoken out against the use of their artwork, while others have found generative AI to be a great assisting tool. The debate has many facets, and over the last few months a number of legal actions have been filed against generative AI companies, including against Stability AI in the High Court of Justice in London and in California, and against GitHub, Microsoft and OpenAI.

In brief, some of the allegations are:

- That content including photographs, images, artwork and text has been copied to train generative AI models without permission or compensation.

- That the legal rights of creators who posted code or other work under open-source licenses on GitHub were violated when their code or work was used to train generative AI models.

- Unfair competition.

If successful, these legal actions could hamper the progress and development of generative AI, including restricting the use of copyrighted content as training data. On the other hand, a win for the generative AI companies could mark a turning point for the law of copyright and allow the use of copyrighted content to advance generative AI. Even so, we should also consider the role of ethics and its implications.

For many years, I have been an advocate for technology innovation and for the many benefits that technologies and AI could contribute to the progress of humankind. As a technologist, I have been advocating for text and data mining (TDM) exceptions under our copyright laws. Jurisdictions including the US, the EU, the UK, Singapore and Japan have provided some form of TDM exception, allowing machine learning to use copyright-protected content.

However, during the course of my work and interactions at WSIS, IGF, LAWASIA and on “Battle for Control and Use of Data”, I have become increasingly concerned about whether:

- Generative AI is taking advantage of the fruits of human labour, and we have upset the apple cart.

- We have the right sense of fair play, recompense or attribution for the use of content.

- The rationales and assumptions underlying copyright have been undermined, including concepts of ownership, attribution and creativity.

- We should explore new laws for AI.

Currently, it is far from clear whether copyrighted content may be used to train generative AI models without permission.

From this brief exploration, it is clear that the values and issues above will benefit from much broader debate and consultation, since for many it is a matter of perspective and a balancing of legitimate interests.

This article was originally published on the UNCTAD website